Question

Updated on

1 Nov 2018

- French (France)
- English (US)
- Spanish (Spain)
- English (UK)

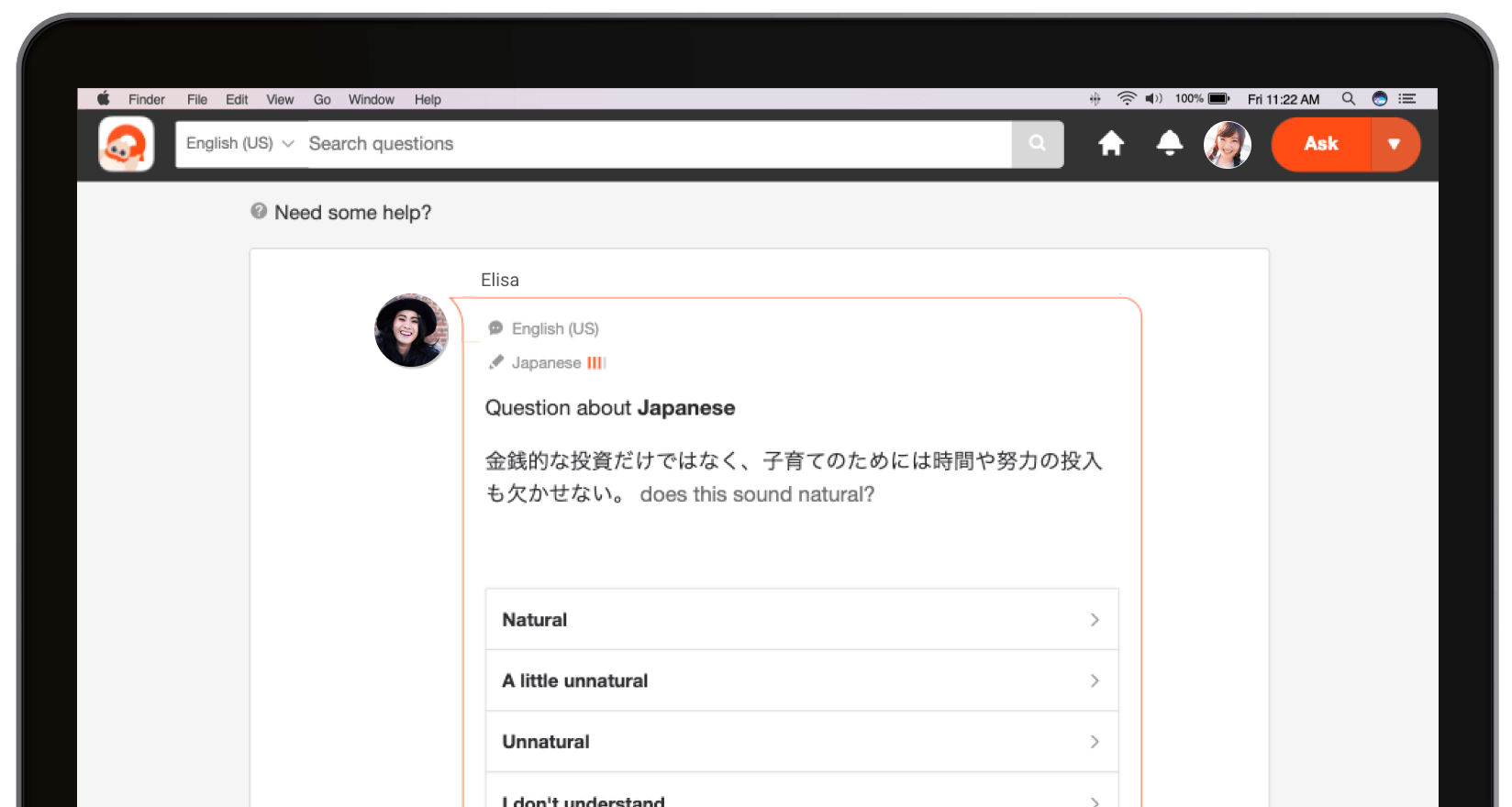

Question about English (US)

Western fiction is a famous genre of the American literature. Even though the western stories take place in the eighteenth or nineteenth century, and particularly during the Conquest of the West, it still forms part of the American culture, whether it be with books or movies

Does this sound natural?


Answers


- English (US)

A little unnatural

of the American literature = *of American literature

Was this answer helpful?

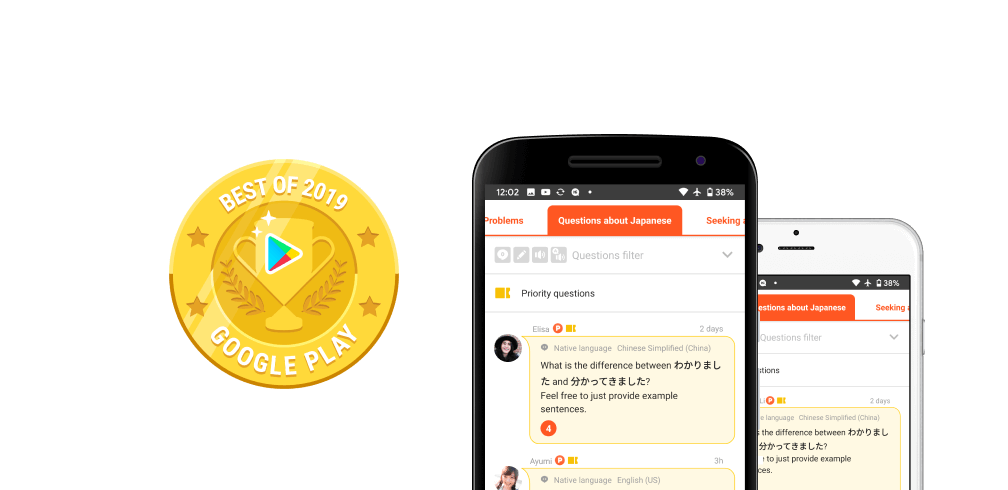

[News] Hey you! The one learning a language!

Do you know how to improve your language skills❓ All you have to do is have your writing corrected by a native speaker!

With HiNative, you can have your writing corrected by both native speakers and AI 📝✨.

With HiNative, you can have your writing corrected by both native speakers and AI 📝✨.

Sign up

Similar questions

Previous question/ Next question

Thank you! Rest assured your feedback will not be shown to other users.

Thank you very much! Your feedback is greatly appreciated.

Thank you very much! Your feedback is greatly appreciated.